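Before you begin

- Labs create a Google Cloud project and resources for a fixed period of time.

- Labs have a time limit and no pause feature. If you end the lab, you must start over from the beginning.

- To start, click Start lab at the top left of the screen.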

- Create Pub/Sub topics and a BigQuery dataset (30 points)

- Create a Beam Python pipeline with Beam ML and run the pipeline (30 points)

- Add another model for A/B testing, run the pipeline, and check the comparisons in BigQuery (40 points)

Toxicity is a real problem in the gaming industry. In this lab, we build a real-time pipeline that catches offenders, so you get to be the real hero, in real time!

Apache Beam is an open-source, unified programming model for batch and streaming data processing pipelines that simplifies large-scale data processing. Google Cloud Dataflow is a managed service for running a wide variety of data processing patterns with Beam.

Real-time intelligence lets you act on your data as soon as it arrives. With Beam ML, you can run inference with your own model inside the pipeline and use the predictions in later pipeline steps.

Toxicity can happen in many ways, and chat is one of them. The model in this pipeline is trained on chat data to identify toxic messages. The neat thing about this setup is that you can apply the same steps to other applications, such as fraud detection or supply chain monitoring. Just swap out the model, and off you go.

This lab is at an intermediate level. You should be familiar with Python and the Beam model; however, if needed, you can reference the guide and fully written code samples along the way.

For each lab, you get a new Google Cloud project and set of resources for a fixed time at no cost.

Sign in to Qwiklabs using an incognito window.

Note the lab's access time (for example, 1:15:00), and make sure you can finish within that time.

There is no pause feature. You can restart if needed, but you have to start at the beginning.

When ready, click Start lab.

Note your lab credentials (Username and Password). You will use them to sign in to the Google Cloud Console.

Click Open Google Console.

Click Use another account and copy/paste credentials for this lab into the prompts.

If you use other credentials, you'll receive errors or incur charges.

Accept the terms and skip the recovery resource page.

Cloud Shell is a virtual machine that contains development tools. It offers a persistent 5-GB home directory and runs on Google Cloud. Cloud Shell provides command-line access to your Google Cloud resources. gcloud is the command-line tool for Google Cloud. It comes pre-installed on Cloud Shell and supports tab completion.

Click the Activate Cloud Shell button at the top of the Google Cloud console.

Click Continue.

It takes a few moments to provision and connect to the environment. When you are connected, you are also authenticated, and the project is set to your PROJECT_ID.


The complete pipeline is in part2.py. The code is split into part1.py and part2.py to match the lab tasks.

You will work from template versions of part1.py and part2.py. Code is missing wherever you see a # TODO label, and you fill it in by following the steps in the rest of this lab.

Click Check my progress to verify the objective.

In this task, you create a Python Beam Pipeline that reads in a message submitted to Pub/Sub and makes a prediction on whether the message is toxic or not.

Click Open Editor at the top of your Cloud Shell window to launch the Cloud Shell Editor. You will use this editor to write your pipeline code.

Once the editor launches, use the file explorer on the left to navigate to training_data_analyst > quests > getting_started_apache_beam > beam_ml_toxicity_in_gaming > exercises.

Click the file part1.py to open it in the editor. You are now ready to add code to the file to build your pipeline.

For simplicity, we use the built-in source, ReadFromPubSub.

This is a streaming pipeline, and we join elements in the second half of the lab, so we need to apply a window to the incoming messages.

Again, for simplicity, we use a fixed window.

We tag each element with a key so it can be identified later. In the example, the key comes from a message attribute.

Essentially, you want to output a tuple (key, element).

In the example, we decode the message payload from bytes into a string. Decode it only if you plan to use it as a string; otherwise, leave it in binary form.
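Below is a plain-Python sketch of the keying step. It assumes the key attribute is named `userid`, which is a hypothetical name; use whichever attribute your publisher actually sets. It works on any object with `.data` and `.attributes`, which includes Beam's `PubsubMessage`.

```python
# Attribute used as the key (assumption): substitute the attribute your messages carry.
KEY_ATTRIBUTE = "userid"


def key_by_attribute(message, attribute=KEY_ATTRIBUTE):
    """Turn a PubsubMessage-like object into a (key, text) tuple.

    The key comes from a message attribute. The payload is decoded from bytes
    to str because the model in these sketches expects text input.
    """
    key = message.attributes.get(attribute, "unknown")
    text = message.data.decode("utf-8")
    return key, text
```

In the pipeline, this would be applied with `beam.Map(key_by_attribute)` after the window step. Falling back to `"unknown"` when the attribute is missing is a choice made in this sketch. Filtering such messages out is an equally valid option.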

Create a model handler to load your model. We use a KeyedModelHandler because each element is a (key, element) tuple.

The KeyedModelHandler passes only the element to the model and reattaches the key to each prediction, so you don't need to parse the key yourself.

Submit your message to the model to get a prediction. Nothing extra is needed here, as long as your model accepts the input you pass to it.

Parse the prediction output from the model using the PredictionResult object.

In this example, we use a simple method to show that the results are ordinary objects you can manipulate and use as needed. Pick an arbitrary threshold on the model's output to decide whether a message is toxic. Then tag each result with the key not for a not-nice message or nice for a nice message.
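Below is a plain-Python sketch of the parsing and tagging step. It assumes the model's `inference` is a single score where higher means more toxic, and the 0.5 cut-off is arbitrary. Both assumptions may not match the lab's model. It expects the `(key, PredictionResult)` tuples that a keyed `RunInference` emits.

```python
# Arbitrary cut-off (assumption): scores at or above it are treated as toxic.
TOXICITY_THRESHOLD = 0.5


def tag_prediction(keyed_result, threshold=TOXICITY_THRESHOLD):
    """Map (key, PredictionResult) to ("not" | "nice", (key, original message))."""
    key, result = keyed_result
    # float() accepts plain floats and NumPy scalars; a model that returns
    # vectors or logits would need its own parsing here.
    score = float(result.inference)
    label = "not" if score >= threshold else "nice"
    return label, (key, result.example)
```

This would be applied with `beam.Map(tag_prediction)` right after the inference step.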

We want to submit only the toxic messages to the output topic, where they can trigger further action.

Use the Beam primitive Filter to keep all elements with the not key.

Submit the flagged messages to Pub/Sub.

You can pass values such as topic names and model paths as command-line arguments, or you can hardcode them in your pipeline.

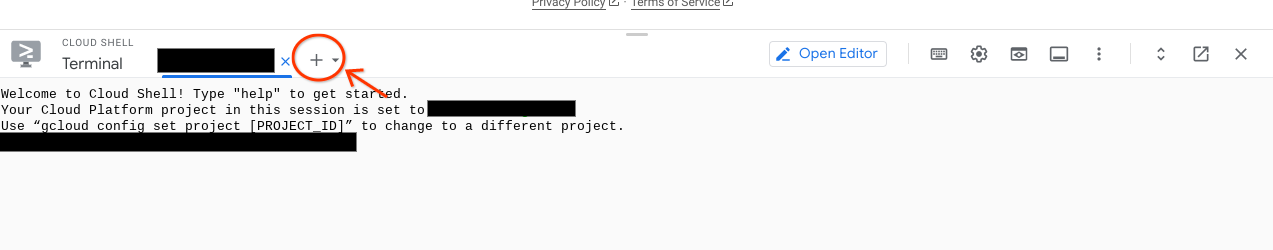

Use the + sign at the top of the console to open another terminal. In the new terminal, submit the messages.
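The lab's own test messages are not reproduced here. As an alternative to the lab's command, messages can also be published from Python, and below is a sketch of that. The `publisher` argument is assumed to behave like `google.cloud.pubsub_v1.PublisherClient`, whose `publish(topic, data, **attributes)` returns a future. The attribute name `userid` is hypothetical and should match whatever attribute your pipeline keys on.

```python
def publish_messages(publisher, topic_path, messages, key_attribute="userid"):
    """Publish (key, text) pairs and wait for the server-assigned message IDs.

    Each text is sent as UTF-8 bytes, and the key travels as a message attribute.
    """
    futures = [
        publisher.publish(topic_path, text.encode("utf-8"), **{key_attribute: key})
        for key, text in messages
    ]
    # Block until every publish call has been acknowledged.
    return [future.result() for future in futures]
```

With the real client, `publisher = pubsub_v1.PublisherClient()` and `topic_path = publisher.topic_path("YOUR_PROJECT", "YOUR_INPUT_TOPIC")`, where both names are placeholders.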

Click Check my progress to verify the objective.

Open the part2.py file in your exercises folder and use it as a starting point to build the Python pipeline. The individual steps are labeled in the comments. Some steps have multiple code snippets, so make sure you complete them all.

In this task, you add another model to compare model results. You can test multiple models and see which is best suited for your needs. You also send the results to BigQuery, where you can compare them.

Create another model handler, like we did earlier in the lab.

Wrap it in a KeyedModelHandler, and either hardcode the model path (./movie_trained) or pass it in as a variable.

Submit the input to the new model.

Remember that the pipeline forks at this point: reuse the same PCollection object that you fed to the first model to continue your pipeline.

To compare the two models' results, we first need to combine them.

To join the results, use CoGroupByKey to collate the two PCollections (from the movie and gaming models) by key.

In a real-world scenario, you want to parse the results and store them properly with a schema.

To make this lab shorter, we're going to take the entire joined result, cast it into a giant string, and store it in BigQuery.

To write your results to BigQuery, use the built-in IO WriteToBigQuery.

Use a write method, such as STREAMING_INSERTS, to write into BigQuery.

Then run the pipeline with the following command in the Cloud Shell terminal. If you receive an error, make sure you are in the correct directory (~/devrel-demos/data-analytics/beam_ml_toxicity_in_gaming). Note that you should not expect any output after this step.

You can test the pipeline by submitting some more messages.

We reuse the messages from above.

Use the + sign at the top of the console to open another terminal. In the new terminal, submit the messages.

Use the following query to pull the data from BigQuery and check the comparison. Note that you may have to wait a few minutes for the BigQuery table to populate.

Click Check my progress to verify the objective.

When you have completed your lab, click End Lab. Qwiklabs removes the resources you’ve used and cleans the account for you.

You will be given an opportunity to rate the lab experience. Select the applicable number of stars, type a comment, and then click Submit.


You can close the dialog box if you don't want to provide feedback.

For feedback, suggestions, or corrections, please use the Support tab.

Congratulations, you've now learned how to use ML in Beam.

You can now customize your pipelines to best suit your needs.

Copyright 2022 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.
