Checkpoints

Create a Cloud Dataproc cluster (region: us-east1)

Submit a Spark job to your cluster (region: us-east1)

Introduction to Cloud Dataproc: Hadoop and Spark on Google Cloud

GSP123

Overview

Cloud Dataproc is a managed Spark and Hadoop service that lets you take advantage of open source data tools for batch processing, querying, streaming, and machine learning. Cloud Dataproc automation helps you create clusters quickly, manage them easily, and save money by turning clusters off when you don't need them. With less time and money spent on administration, you can focus on your jobs and your data.

This lab is adapted from the guide "Create a Dataproc cluster by using the Google Cloud console".

What you'll learn

- How to create a managed Cloud Dataproc cluster (with Apache Spark pre-installed).

- How to submit a Spark job.

- How to shut down your cluster.

What you'll need

A browser, such as Chrome or Firefox.

Setup and requirements

Before you click the Start Lab button

Read these instructions. Labs are timed and you cannot pause them. The timer, which starts when you click Start Lab, shows how long Google Cloud resources will be made available to you.

This hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

- Access to a standard internet browser (Chrome browser recommended).

- Time to complete the lab. Remember, once you start, you cannot pause a lab.

How to start your lab and sign in to the Google Cloud console

- Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is the Lab Details panel with the following:

  - The Open Google Cloud console button

  - Time remaining

  - The temporary credentials that you must use for this lab

  - Other information, if needed, to step through this lab

- Click Open Google Cloud console (or right-click and select Open Link in Incognito Window if you are running the Chrome browser).

  The lab spins up resources, and then opens another tab that shows the Sign in page.

  Tip: Arrange the tabs in separate windows, side-by-side.

  Note: If you see the Choose an account dialog, click Use Another Account.

- If necessary, copy the Username below and paste it into the Sign in dialog.

  {{{user_0.username | "Username"}}}

  You can also find the Username in the Lab Details panel.

- Click Next.

- Copy the Password below and paste it into the Welcome dialog.

  {{{user_0.password | "Password"}}}

  You can also find the Password in the Lab Details panel.

- Click Next.

  Important: You must use the credentials the lab provides you. Do not use your Google Cloud account credentials.

  Note: Using your own Google Cloud account for this lab may incur extra charges.

- Click through the subsequent pages:

  - Accept the terms and conditions.

  - Do not add recovery options or two-factor authentication (because this is a temporary account).

  - Do not sign up for free trials.

After a few moments, the Google Cloud console opens in this tab.

Permission to Service Account

To assign storage permission to the service account, which is required for creating a cluster:

- Go to Navigation menu > IAM & Admin > IAM.

- Click the pencil icon on the compute@developer.gserviceaccount.com service account.

- Click the + ADD ANOTHER ROLE button and select the Storage Admin role.

- Once you've selected the Storage Admin role, click Save.
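The console steps above can also be expressed as a single `gcloud projects add-iam-policy-binding` call. The sketch below only builds and prints that command; it does not run it. The project ID and project number in the usage line are hypothetical placeholders, and the sketch assumes the service account is the default Compute Engine one, whose address has the form `PROJECT_NUMBER-compute@developer.gserviceaccount.com`.

```python
import shlex


def storage_admin_binding_command(project_id: str, project_number: str) -> list[str]:
    """Build a gcloud command granting Storage Admin to the default
    Compute Engine service account of the given project."""
    # Assumption: the account edited in the console is the default
    # Compute Engine service account for this project.
    member = f"serviceAccount:{project_number}-compute@developer.gserviceaccount.com"
    return [
        "gcloud", "projects", "add-iam-policy-binding", project_id,
        f"--member={member}",
        "--role=roles/storage.admin",  # role ID for "Storage Admin"
    ]


if __name__ == "__main__":
    # Hypothetical placeholders; use the values from your Lab Details panel.
    cmd = storage_admin_binding_command("my-lab-project", "123456789012")
    print(shlex.join(cmd))
```

Printing the command rather than executing it keeps the sketch safe to run anywhere; you could paste the printed line into Cloud Shell if you prefer the command line to the console.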

Task 1. Create a Cloud Dataproc cluster

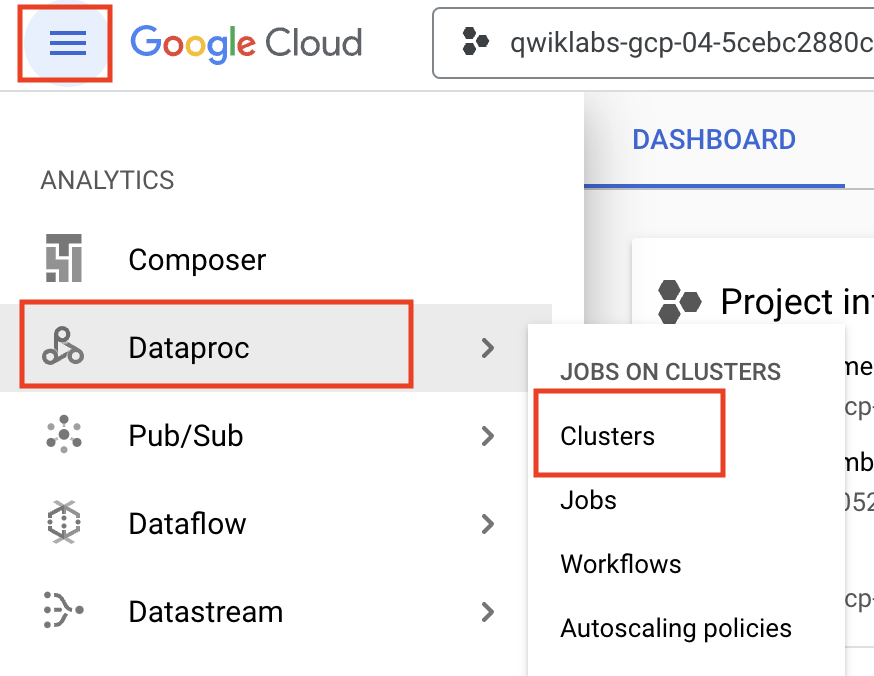

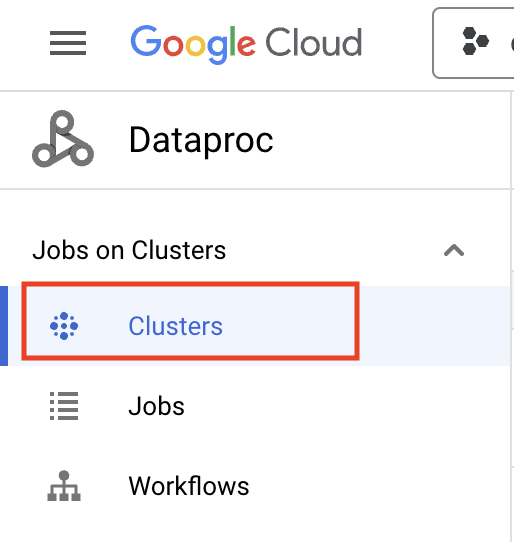

- In the console, click Navigation menu > Dataproc > Clusters on the top left of the screen:

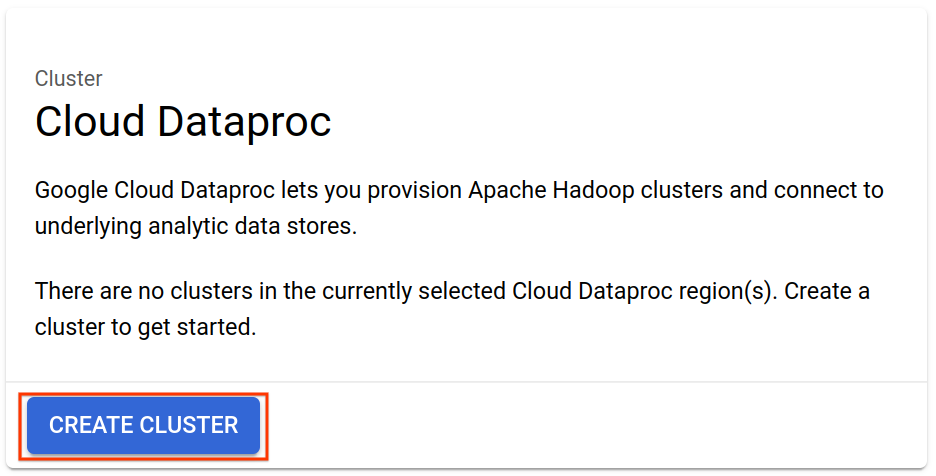

- To create a new cluster, click Create cluster, then click Create for Cluster on Compute Engine.

- There are many parameters you can configure when creating a new cluster. Set values for the parameters listed below and leave the default settings for the other parameters:

| Parameter | Value |
|---|---|
| Name | |
| Region | |
| Zone | |
| Click Configure nodes, for Manager node - Machine type | |
| Worker node - Machine type | |
| Worker node - Primary disk size | 100 GB |
| Worker node - Primary disk type | Standard Persistent Disk |
| Click Customize cluster, for Internal IP only | Uncheck Configure all instances to have only internal IP addresses |

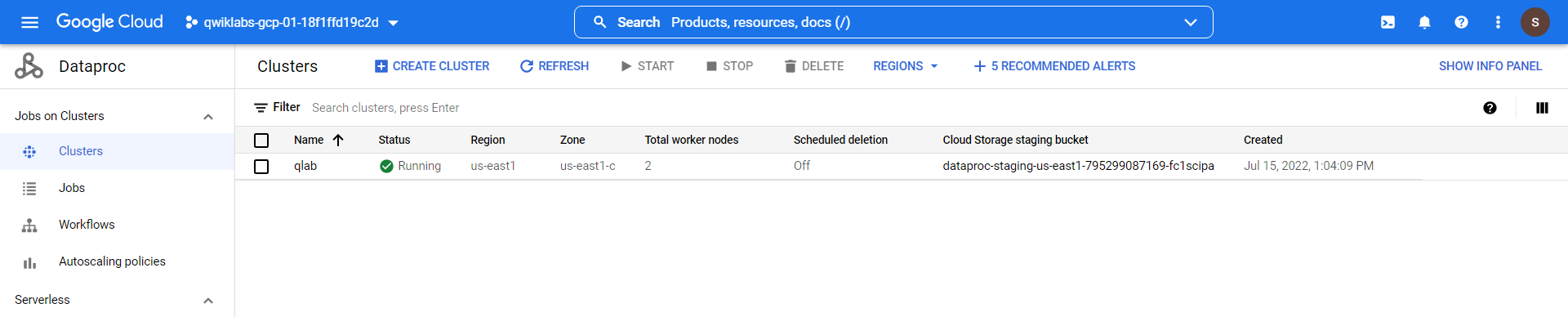

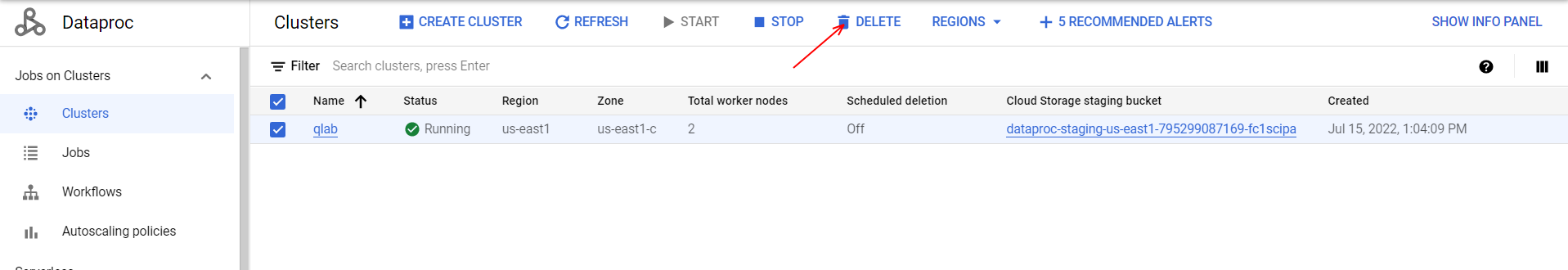

- Click Create to create the new cluster. The Status changes from Provisioning to Running. Move on to the next step once the cluster's Status is Running.
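For readers who want to see how the console parameters map onto the command line, here is a minimal sketch that assembles a `gcloud dataproc clusters create` command. It is an optional equivalent, not part of the lab's graded steps. The cluster name, zone, and machine types in the usage line are hypothetical placeholders, because the parameter table does not list them; the region is the one named in the lab's checkpoints. Note that gcloud calls the manager node "master" in its flag names.

```python
import shlex


def create_cluster_command(
    name: str,
    region: str,
    zone: str,
    master_machine_type: str,
    worker_machine_type: str,
    worker_disk_gb: int = 100,
    worker_disk_type: str = "pd-standard",  # Standard Persistent Disk
) -> list[str]:
    """Build a gcloud command mirroring the console parameters above."""
    # --no-address is deliberately omitted: the lab unchecks
    # "Configure all instances to have only internal IP addresses".
    return [
        "gcloud", "dataproc", "clusters", "create", name,
        f"--region={region}",
        f"--zone={zone}",
        f"--master-machine-type={master_machine_type}",
        f"--worker-machine-type={worker_machine_type}",
        f"--worker-boot-disk-size={worker_disk_gb}GB",
        f"--worker-boot-disk-type={worker_disk_type}",
    ]


if __name__ == "__main__":
    # Hypothetical placeholder values; substitute the ones your lab provides.
    cmd = create_cluster_command(
        "example-cluster", "us-east1", "us-east1-b",
        "e2-standard-2", "e2-standard-2",
    )
    print(shlex.join(cmd))
```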

Test completed task

Click Check my progress to verify your performed task. If you have completed the task successfully, you will be granted an assessment score.

Task 2. Submit a Spark job to your cluster

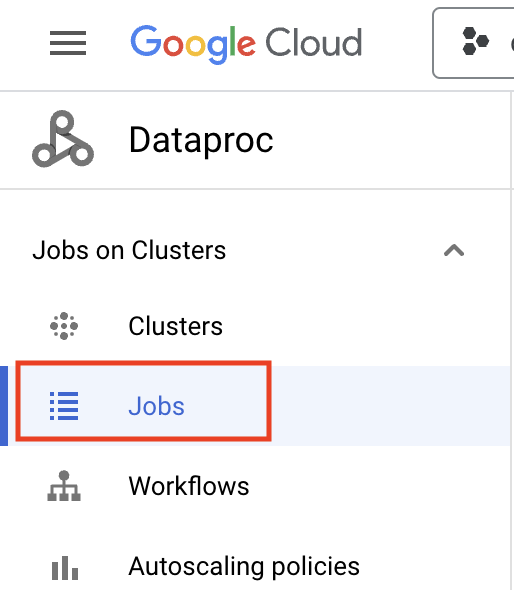

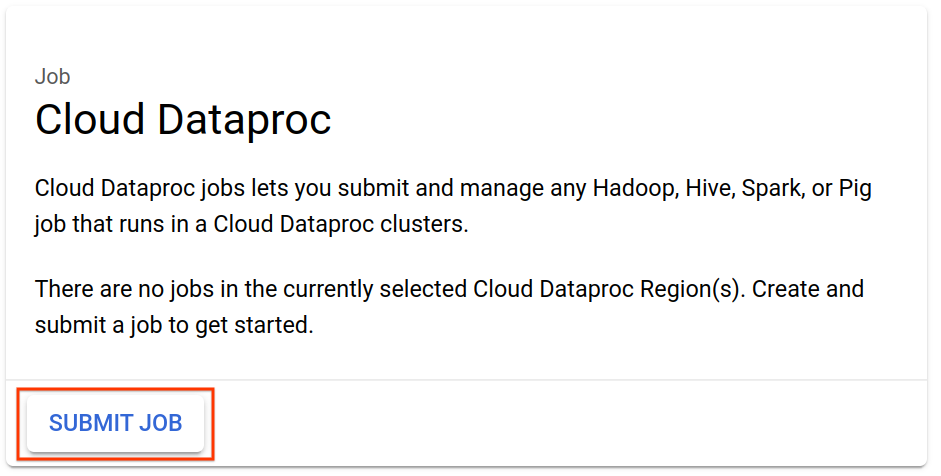

- Select Jobs to switch to Dataproc's jobs view:

- Click Submit job:

- Set values for the parameters listed below and leave the default settings for the other parameters:

| Parameter | Value |
|---|---|
| Region | |
| Cluster | |
| Job type | |
| Main class or jar | |
| Jar files | |
| Arguments | |

- Click Submit.
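The job form above corresponds roughly to `gcloud dataproc jobs submit spark`. The sketch below shows how the form fields could map to that command's flags; it builds the command without running it. Every value in the usage line (cluster name, main class, jar path, argument) is a hypothetical placeholder, since the lab's table leaves them blank.

```python
import shlex
from typing import Sequence


def submit_spark_job_command(
    cluster: str,
    region: str,
    main_class: str,
    jars: Sequence[str],
    args: Sequence[str] = (),
) -> list[str]:
    """Build a gcloud command that submits a Spark job to a cluster."""
    cmd = [
        "gcloud", "dataproc", "jobs", "submit", "spark",
        f"--cluster={cluster}",
        f"--region={region}",
        f"--class={main_class}",          # "Main class or jar" field
        f"--jars={','.join(jars)}",       # "Jar files" field
    ]
    if args:
        # Everything after "--" is passed to the job itself ("Arguments").
        cmd += ["--", *args]
    return cmd


if __name__ == "__main__":
    # Hypothetical placeholders, not the lab's actual values.
    cmd = submit_spark_job_command(
        "example-cluster", "us-east1",
        "com.example.Main", ["gs://example-bucket/job.jar"], ["1000"],
    )
    print(shlex.join(cmd))
```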

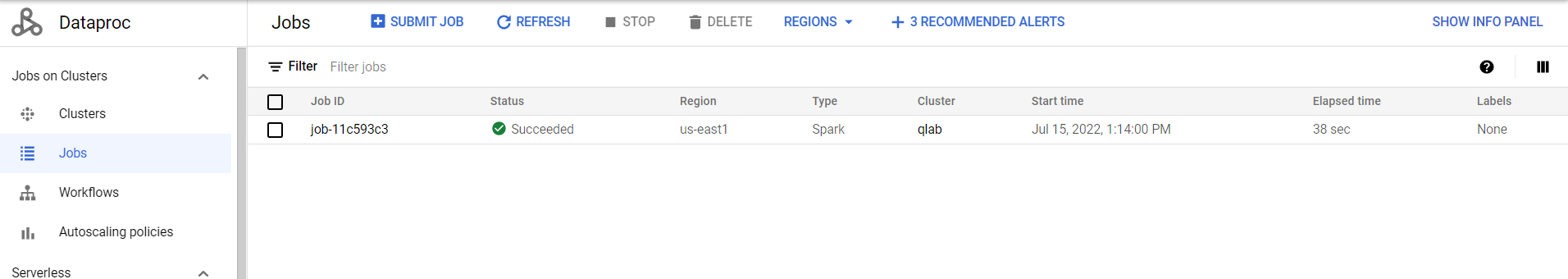

Your job should appear in the Jobs list, which shows all your project's jobs with their cluster, type, and current status. The new job displays as "Running"—move on once you see "Succeeded" as the Status.
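Watching the Status column change from "Running" to "Succeeded" is a simple polling loop. As an illustration only, the sketch below waits until a status source reports the target value. The `get_status` callable is a hypothetical stand-in for however you read the job's status; here it is fed from a fixed list so the example runs without any cloud access.

```python
import time
from typing import Callable


def wait_for_status(
    get_status: Callable[[], str],
    target: str = "Succeeded",
    poll_seconds: float = 5.0,
    timeout_seconds: float = 600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call get_status repeatedly until it returns target or time runs out."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        if status == target:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"last status was {status!r}, wanted {target!r}")
        sleep(poll_seconds)


if __name__ == "__main__":
    # Simulated status feed standing in for the Jobs list.
    feed = iter(["Running", "Running", "Succeeded"])
    print(wait_for_status(lambda: next(feed), poll_seconds=0.0))
```

Passing `sleep` as a parameter keeps the loop easy to exercise without real waiting.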

Test completed task

Click Check my progress to verify your performed task. If you have completed the task successfully, you will be granted an assessment score.

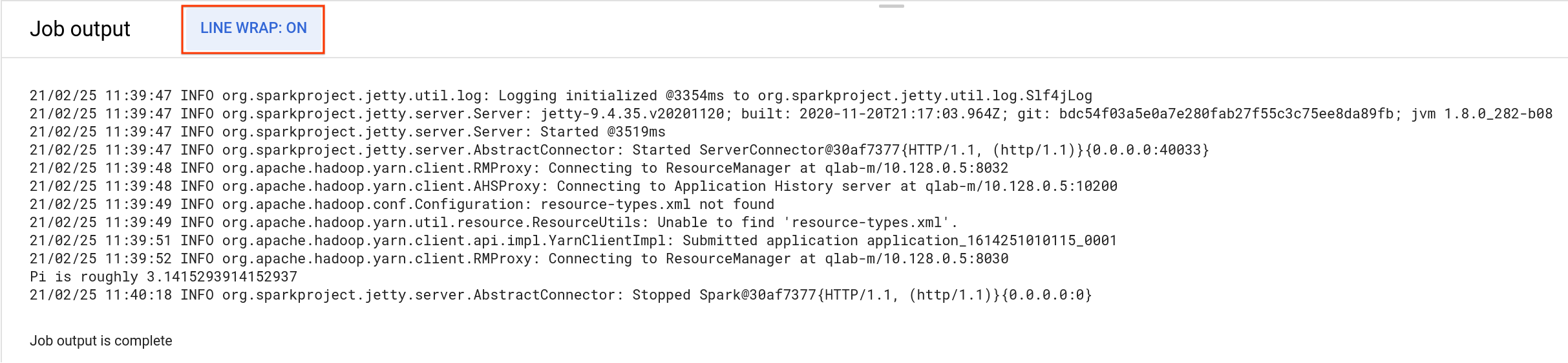

- To see your completed job's output, click the job ID in the Jobs list:

- To avoid scrolling, set Line Wrap to ON:

You should see that your job has successfully calculated a rough value for pi!
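The lab does not spell out how the job arrives at its rough value. One common approach, and the one Spark's bundled pi example takes, is Monte Carlo sampling: throw random points into a square and count the fraction that land inside the inscribed circle. The single-machine sketch below assumes that method; the function name and sample count are illustrative, and a Spark job would spread the sampling across workers.

```python
import random
from typing import Optional


def estimate_pi(samples: int, seed: Optional[int] = None) -> float:
    """Estimate pi from random points in the square [-1, 1] x [-1, 1]."""
    rng = random.Random(seed)
    inside = 0
    for _ in range(samples):
        x = rng.uniform(-1.0, 1.0)
        y = rng.uniform(-1.0, 1.0)
        # The point falls inside the unit circle when x^2 + y^2 <= 1.
        if x * x + y * y <= 1.0:
            inside += 1
    # Circle area / square area = pi / 4, so scale the fraction by 4.
    return 4.0 * inside / samples


if __name__ == "__main__":
    print(f"Pi is roughly {estimate_pi(200_000)}")
```

The estimate is "rough" because its error shrinks only with the square root of the number of samples, which is why more samples (or more workers) give a closer value.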

Task 3. Shut down your cluster

- You can shut down a cluster on the Clusters page:

- Select the checkbox next to the qlab cluster and click Delete:

- Click CONFIRM to confirm deletion.
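The same deletion can be sketched as a `gcloud dataproc clusters delete` command. The example below builds the command only. It uses the qlab cluster name from the step above and the us-east1 region from the lab's checkpoints; adjust both if your lab differs. The `--quiet` flag is an assumption about how you want to run it: it skips the confirmation prompt that the CONFIRM button represents in the console.

```python
import shlex


def delete_cluster_command(name: str, region: str) -> list[str]:
    """Build a gcloud command that deletes a Dataproc cluster."""
    return [
        "gcloud", "dataproc", "clusters", "delete", name,
        f"--region={region}",
        "--quiet",  # skip the interactive confirmation prompt
    ]


if __name__ == "__main__":
    print(shlex.join(delete_cluster_command("qlab", "us-east1")))
```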

Task 4. Test your understanding

Below are multiple-choice questions to reinforce your understanding of this lab's concepts. Answer them to the best of your abilities.

Congratulations!

You learned how to create a Dataproc cluster, submit a Spark job, and shut down your cluster!

Next steps / learn more

Continue your Google Cloud learning with these suggestions:

- Learn more about Dataproc by exploring Dataproc Documentation.

- Take more labs, for example Provisioning and Using a Managed Hadoop/Spark Cluster with Cloud Dataproc (Command Line).

- Start a Quest! A quest is a series of related labs that form a learning path. Completing a quest earns you a digital badge, to recognize your achievement. You can make your badge (or badges) public and link to them in your online resume or social media account. See available quests.

Google Cloud training and certification

...helps you make the most of Google Cloud technologies. Our classes include technical skills and best practices to help you get up to speed quickly and continue your learning journey. We offer fundamental to advanced level training, with on-demand, live, and virtual options to suit your busy schedule. Certifications help you validate and prove your skill and expertise in Google Cloud technologies.

Manual Last Updated April 16, 2024

Lab Last Tested April 16, 2024

Copyright 2024 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.