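Before you begin

- Labs create a Google Cloud project and resources for you to use for a fixed period of time.

- Labs have a time limit and no pause feature. If you end the lab partway through, you must start again from the beginning.

- To begin, click Start Lab at the top left of the screen.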

FraudFinder is a series of notebooks that shows how an end-to-end Data to AI architecture works on Google Cloud, using the toy use case of a real-time fraud detection system.

Data to AI is the process of using AI/ML on data to generate insights, inform decision-making, and augment downstream applications. The FraudFinder labs cover this journey from raw data to MLOps. Throughout the labs, you will learn how to:

- read historical payment transaction data stored in a data warehouse
- read from a live stream of new transactions
- perform exploratory data analysis (EDA)
- do feature engineering
- ingest features into Vertex AI Feature Store
- train a model using Feature Store
- register your model in a model registry
- evaluate your model
- deploy your model to an endpoint
- do real-time inference on your model with Feature Store
- monitor your model

Imagine that you've just joined Cymbal Bank, and you've been asked to design and create an end-to-end fraud detection solution using Google Cloud.

This hands-on lab will walk you through the entire end-to-end architecture across a series of notebooks.

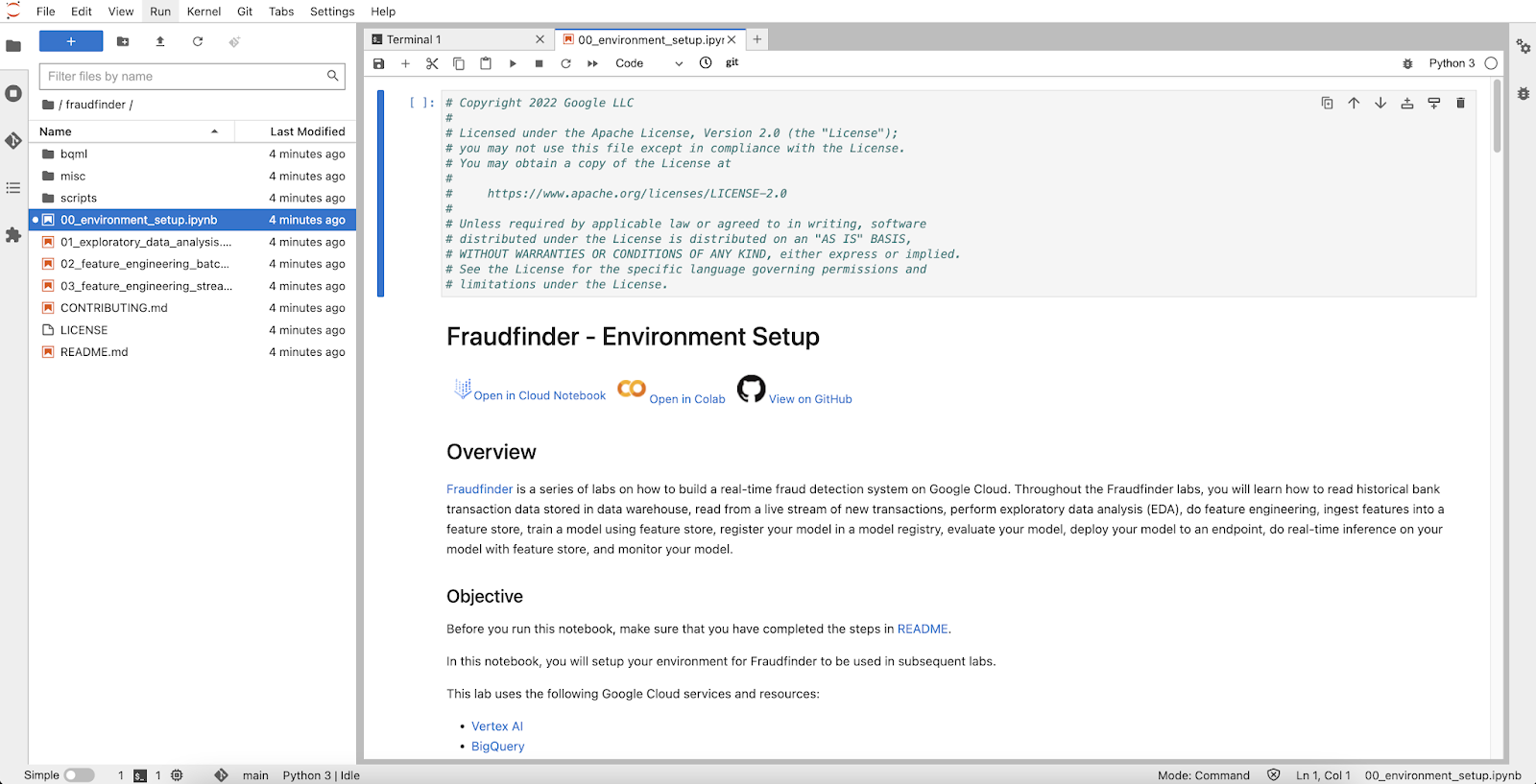

This lab is organized into the following notebooks:

| Notebook | Description |
|---|---|
| 00_environment_setup.ipynb | Set up the data and check that you can query it. |
| 01_exploratory_data_analysis.ipynb | Exploratory data analysis of historical bank transactions stored in BigQuery. |
| 02_feature_engineering_batch.ipynb | Generate new features on bank transactions by customer and terminal over the last n days, using batch feature engineering in SQL with BigQuery. |
| 03_feature_engineering_streaming.ipynb | Compute features based on the last n minutes, using streaming feature engineering with Dataflow. |
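Both feature engineering notebooks compute aggregates over a trailing time window (the last n days in batch, the last n minutes in streaming). The following is a minimal, plain-Python sketch of that idea, not the SQL or Dataflow code the notebooks use. The field names (`tx_id`, `customer_id`, `ts`, `amount`), the feature names (`nb_tx`, `avg_amount`), and the sample data are all hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def rolling_features(transactions, window, key="customer_id"):
    """For each transaction, count and average the amounts of all
    transactions with the same key whose timestamp falls in the
    half-open window (ts - window, ts]."""
    if window <= timedelta(0):
        raise ValueError("window must be positive")

    # Group transactions per key, ordered by timestamp.
    by_key = defaultdict(list)
    for tx in sorted(transactions, key=lambda t: t["ts"]):
        by_key[tx[key]].append(tx)

    features = []
    for rows in by_key.values():
        start = 0      # index of the oldest transaction still in the window
        total = 0.0    # running sum of amounts inside the window
        for end, tx in enumerate(rows):
            total += tx["amount"]
            # Evict transactions that have fallen out of the window.
            while rows[start]["ts"] <= tx["ts"] - window:
                total -= rows[start]["amount"]
                start += 1
            count = end - start + 1
            features.append(
                {"tx_id": tx["tx_id"], "nb_tx": count, "avg_amount": total / count}
            )
    return features


# Hypothetical sample data: three transactions for c1, one for c2.
sample = [
    {"tx_id": "t1", "customer_id": "c1", "ts": datetime(2023, 1, 1, 0, 0), "amount": 10.0},
    {"tx_id": "t2", "customer_id": "c1", "ts": datetime(2023, 1, 1, 12, 0), "amount": 30.0},
    {"tx_id": "t3", "customer_id": "c1", "ts": datetime(2023, 1, 3, 0, 0), "amount": 50.0},
    {"tx_id": "t4", "customer_id": "c2", "ts": datetime(2023, 1, 1, 6, 0), "amount": 5.0},
]
result = rolling_features(sample, window=timedelta(days=1))
```

Passing `window=timedelta(minutes=n)` gives the short-window variant, and `key` can be switched to a terminal identifier to aggregate per terminal instead of per customer.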

After feature engineering, you can take either of the following paths for model training and MLOps:

BigQuery ML (BQML) lets you create and run machine learning models in BigQuery using GoogleSQL queries. If you would prefer to learn how to train a model using Python machine learning packages such as xgboost, skip this section and move on to the next section, "Vertex AI Custom Training".

| Notebook | Description |
|---|---|
| bqml/04_model_training_and_prediction.ipynb | Using the data you previously ingested into Vertex AI Feature Store, train a model with BigQuery ML, register it in Vertex AI Model Registry, and deploy it to an endpoint for real-time prediction. |
| bqml/05_model_training_pipeline_formalization.ipynb | Train and deploy a logistic regression model using BQML, register the model in Vertex AI Model Registry, create a Vertex AI endpoint, and deploy the BQML model to the endpoint. |
| bqml/06_model_deployment.ipynb | Set up the Vertex AI Model Monitoring service to detect feature skew and drift in incoming prediction requests. |
| bqml/07_model_inference.ipynb | Create a Cloud Run app to perform model inference on the endpoint deployed in the previous notebooks. |
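To give a sense of what training a logistic regression model with GoogleSQL looks like, here is a minimal sketch that only builds a BQML `CREATE MODEL` statement as a string. It is not taken from the notebooks. The model name, table name, and label column below are hypothetical placeholders, and submitting the statement to BigQuery (for example with a BigQuery client) is left out.

```python
def build_create_model_sql(model_name, training_table, label_col):
    """Return a BQML CREATE MODEL statement for a logistic regression model.

    All three arguments are placeholders supplied by the caller; this
    function only formats text and does not contact BigQuery.
    """
    return (
        f"CREATE OR REPLACE MODEL `{model_name}`\n"
        "OPTIONS (\n"
        "  model_type = 'LOGISTIC_REG',\n"
        f"  input_label_cols = ['{label_col}']\n"
        ") AS\n"
        f"SELECT * FROM `{training_table}`"
    )


# Hypothetical names, for illustration only.
sql = build_create_model_sql(
    model_name="my_dataset.fraud_model",
    training_table="my_dataset.training_features",
    label_col="tx_fraud",
)
print(sql)
```

The training data is whatever the `SELECT` returns, so feature selection in this style happens in the query itself rather than in separate Python code.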

Vertex AI custom training lets you write any ML code and train it in the cloud using Vertex AI. If you would prefer to learn how to train machine learning models directly in BigQuery with SQL, followed by MLOps with Vertex AI, use the notebooks in the "BigQuery ML" section above instead.

| Notebook | Description |
|---|---|
| vertex_ai/04_experimentation.ipynb | Using the data you previously ingested into Vertex AI Feature Store, train a model with xgboost in a local kernel, track hyperparameter-tuning experiments on Vertex AI, and deploy the model to an endpoint for real-time prediction. |
| vertex_ai/05_model_training_xgboost_formalization.ipynb | Build a Vertex AI dataset, build a Docker container, train a custom XGBoost model using Vertex AI custom training, evaluate the model, and deploy it to a Vertex AI endpoint. |
| vertex_ai/06_formalization.ipynb | Use Vertex AI Feature Store, Vertex AI Pipelines, and Vertex AI Model Monitoring to build and run an end-to-end ML pipeline from components. |
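Both paths rely on Vertex AI Model Monitoring to detect feature skew and drift in prediction requests. The sketch below illustrates the underlying idea for a single categorical feature: compare the distribution of values seen at training time with the distribution seen at serving time, and flag the feature when the difference exceeds a threshold. It is an illustration under stated assumptions, not the service's implementation. The L-infinity distance, the `0.3` threshold, and the sample values are choices made for this example.

```python
from collections import Counter


def distribution(values):
    """Return the relative frequency of each distinct value."""
    if not values:
        raise ValueError("values must not be empty")
    counts = Counter(values)
    total = len(values)
    return {value: count / total for value, count in counts.items()}


def l_infinity_distance(baseline, serving):
    """Largest absolute difference in relative frequency across all
    categories that appear in either sample."""
    p = distribution(baseline)
    q = distribution(serving)
    return max(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in set(p) | set(q))


# Hypothetical values of one categorical feature.
training_values = ["a", "a", "a", "b"]   # 75% "a", 25% "b"
serving_values = ["a", "b", "b", "b"]    # 25% "a", 75% "b"

SKEW_THRESHOLD = 0.3  # illustrative threshold, not a service default
distance = l_infinity_distance(training_values, serving_values)
skew_detected = distance > SKEW_THRESHOLD
```

Comparing serving data against training data corresponds to skew detection. Comparing serving data from two different time periods with the same function would correspond to drift detection.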

In your Google Cloud project, navigate to Vertex AI Workbench. To do so, you can either click on the link below, or search for "Vertex AI Workbench" in the search bar at the top of the Google Cloud console.

https://console.cloud.google.com/vertex-ai/workbench/

On the Workbench page, you should see a notebook instance has already been created for you.

00_environment_setup.ipynb


Lab Last Tested November 01, 2023
