Before you begin

- Labs create a Google Cloud project and resources for a fixed time

- Labs have a time limit and no pause feature. If you end the lab, you'll have to restart from the beginning.

- On the top left of your screen, click Start lab to begin

Create Vertex AI Platform Notebooks instance and clone course repo

/ 20

Setup the data environment

/ 20

Aggregating Site Traffic by User with SQL

/ 20

Aggregating Site Traffic by Minute with SQL

/ 15

In this lab, you:

This Qwiklabs hands-on lab lets you do the lab activities yourself in a real cloud environment, not in a simulation or demo environment. It does so by giving you new, temporary credentials that you use to sign in and access Google Cloud for the duration of the lab.

To complete this lab, you need:

Click the Start Lab button. If you need to pay for the lab, a pop-up opens for you to select your payment method. On the left is a panel populated with the temporary credentials that you must use for this lab.

Copy the username, and then click Open Google Console. The lab spins up resources, and then opens another tab that shows the Choose an account page.

On the Choose an account page, click Use Another Account. The Sign in page opens.

Paste the username that you copied from the Connection Details panel. Then copy and paste the password.

After a few moments, the Cloud console opens in this tab.

For this lab, you will be running all commands in a terminal from your notebook.

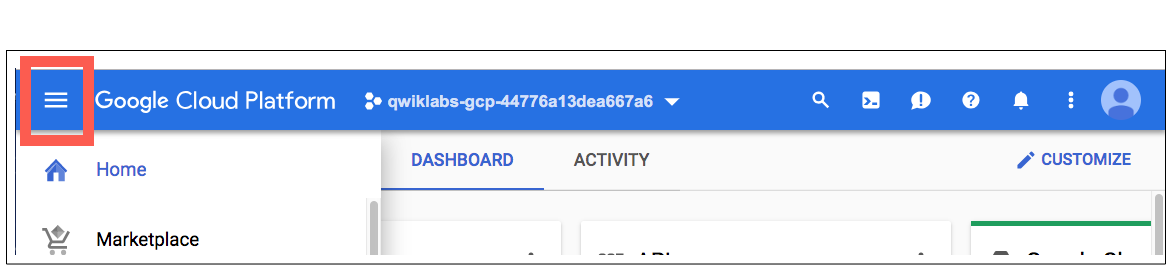

In the Google Cloud Console, on the Navigation Menu, click Vertex AI > Workbench.

Click Enable Notebooks API.

On the Workbench page, select USER-MANAGED NOTEBOOKS and click CREATE NEW.

In the New instance dialog box that appears, set the region to

For Environment, select Apache Beam.

Click CREATE at the bottom of the dialog vox.

Next you will download a code repository for use in this lab.

On the left panel of your notebook environment, in the file browser, you will notice the training-data-analyst repo added.

Navigate into the cloned repo /training-data-analyst/quests/dataflow_python/. You will see a folder for each lab, which is further divided into a lab sub-folder with code to be completed by you, and a solution sub-folder with a fully workable example to reference if you get stuck.

Click Check my progress to verify the objective.

In this part of the lab, you rewrite your previous BatchUserTraffic pipeline so that it performs the following:

CommonLog object.As in the prior labs, the first step is to generate data for the pipeline to process. You will open the lab environment and generate the data as before:

Before you can begin editing the actual pipeline code, you need to ensure that you have installed the necessary dependencies.

The script creates a file called events.json containing lines resembling the following:

It then automatically copies this file to your Google Cloud Storage bucket at

events.json.Click Check my progress to verify the objective.

training-data-analyst/quests/dataflow_python/4_SQL_Batch_Analytics/lab/ and open the batch_user_traffic_SQL_pipeline.py file.This pipeline already contains the necessary code to accept command-line options for the input path and one output table name, as well as code to read in events from Google Cloud Storage, parse those events, and write results to BigQuery. However, some important parts are missing.

As in the previous lab, the next step in the pipeline is to aggregate the events by each unique user_id and count pageviews for each. This time, however, you will perform the aggregation using SQL, using SqlTransform instead of Python-based transforms.

batch_user_traffic_SQL_pipeline.py, add the following import statement:query variable definition:#TODO. You will use this code to write a Transform to write raw data to BigQuery:Beam SQL can be implemented in both the Apache Calcite dialects (default) and ZetaSQL dialects. While both will be executed by Dataflow, we will be implementing ZetaSQL in this example, as it is similar to the language used in BigQuery and is also the dialect implemented in 'Dataflow SQL' — SQL queries authored directly in the Dataflow UI.

#TODO. Apply a SQLTransform using ZetaSQL Dialect using the following code:Navigate to Navigation Menu > Dataflow to see the status of your pipeline.

Once your pipeline has finished, go to the BigQuery UI to query the two resulting tables.

Make sure that logs.raw exists and has data populated, as you will need that later in the lab.

Click Check my progress to verify the objective.

In this part of the lab, you rewrite your previous BatchMinuteTraffic pipeline so that it performs the following:

CommonLog object and then adds a Joda Timestamp attribute to the object.In this task, you will add a Joda timestamp field to your CommonLog object, implicitly converting it to a generic Row object.

The appropriate imports and options have already been set to use ZetaSQL. A pipeline has been built with steps to ingest and write the data, but not to transform or aggregate it. In the file explorer of the IDE, navigate to training-data-analyst/quests/dataflow_python/4_SQL_Batch_Analytics/lab/ and open the file batch_minute_user_SQL_pipeline.py.

#TODO, add the formatted timestamp as a string:Note that when using the Python SDK, we currently cannot directly pass in objects of type datetime to a SqlTransform. Instead, we will convert the object into a string using strftime and then in SQL use the TIMESTAMP function.

ts to type TIMESTAMP and use it as the event timestamp for our fixed one-minute windows. The window_start field is generated by TUMBLE and is of type TIMESTAMP as well. Due to the issue we discussed earlier with the Python SDK, we must convert this field to a STRING before sending the resulting PCollection back to Python transforms.#TODO, apply a SQLTransform using ZetaSQL Dialect using the following code:In the Cloud Console, navigate to Navigation Menu > Dataflow to see the status of your pipeline.

Once your pipeline has finished, go to the BigQuery UI to query the resulting logs.minute_traffic tables. Alternatively, you can query from the terminal:

Click Check my progress to verify the objective.

When you have completed your lab, click End Lab. Google Cloud Skills Boost removes the resources you’ve used and cleans the account for you.

You will be given an opportunity to rate the lab experience. Select the applicable number of stars, type a comment, and then click Submit.

The number of stars indicates the following:

You can close the dialog box if you don't want to provide feedback.

For feedback, suggestions, or corrections, please use the Support tab.

Copyright 2022 Google LLC All rights reserved. Google and the Google logo are trademarks of Google LLC. All other company and product names may be trademarks of the respective companies with which they are associated.

This content is not currently available

We will notify you via email when it becomes available

Great!

We will contact you via email if it becomes available

One lab at a time

Confirm to end all existing labs and start this one